The following instructions are intended for new users of the OMNI cluster. They introduce some basic terms and provide links to more detailed information. Specifically, they explain what a cluster is, how using a cluster differs from using a normal computer, how to get access to the cluster and where to turn if you have questions.

What is a cluster?

A cluster is essentially a large computer made up of many smaller computers. These smaller computers are called nodes. Each node has its own RAM (memory) and a specific number of processors, also called cores. The OMNI cluster has about 450 compute nodes for running computations, 4 login nodes that users can access directly, and a number of specialized nodes. The normal compute nodes have 64 CPU cores and 256 GB of RAM each; a detailed list is here. On the OMNI cluster, individual nodes do not have their own hard drives; instead, they share a central file system.
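To see what the node you are currently on actually provides, you can query it directly. The following Python sketch (standard library only, Linux-specific) reports the logical CPUs the operating system sees, the CPUs your process is allowed to use, and the total physical RAM; the function name node_summary is our own illustration, not a cluster tool. Run on a login node, it describes that login node, not a compute node. Inside a job, the usable CPU count is usually restricted to the cores allocated to the job, depending on how the scheduler is configured. The reported RAM is typically somewhat below the nominal figure because the kernel and firmware reserve part of it, and if simultaneous multithreading is enabled, the CPU count may be twice the number of physical cores.

```python
import os


def node_summary() -> dict:
    """Summarize the CPUs and memory visible on the current Linux node."""
    page_size = os.sysconf("SC_PAGE_SIZE")    # bytes per memory page
    phys_pages = os.sysconf("SC_PHYS_PAGES")  # physical RAM, in pages
    return {
        "hostname": os.uname().nodename,
        # All logical CPUs the operating system sees on this node.
        "cpus_on_node": os.cpu_count(),
        # CPUs this process may run on; inside a job this is usually
        # limited to the cores the scheduler allocated.
        "cpus_usable": len(os.sched_getaffinity(0)),
        # Total physical memory, converted from bytes to GiB.
        "ram_gib": round(page_size * phys_pages / 2**30, 1),
    }


if __name__ == "__main__":
    for key, value in node_summary().items():
        print(f"{key}: {value}")
```

Comparing the output on a login node with the output of the same script inside a job is a quick way to confirm that a job received the cores you asked for.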

Linux

Our cluster, like almost all clusters, runs Linux. If you do not know Linux well (yet), we offer several ways to familiarize yourself with it.

First, we offer a Linux introduction course each semester, alternating between German and English. Our English-language Linux course usually takes place in mid-July. You can find a list of our courses here.

Second, ZIMT, in cooperation with other universities in North Rhine-Westphalia, has created the HPC Wiki, which contains, among other things, a Linux video tutorial.

Finally, we explain some basic terms on our Linux page as well.

Differences between clusters and normal computers

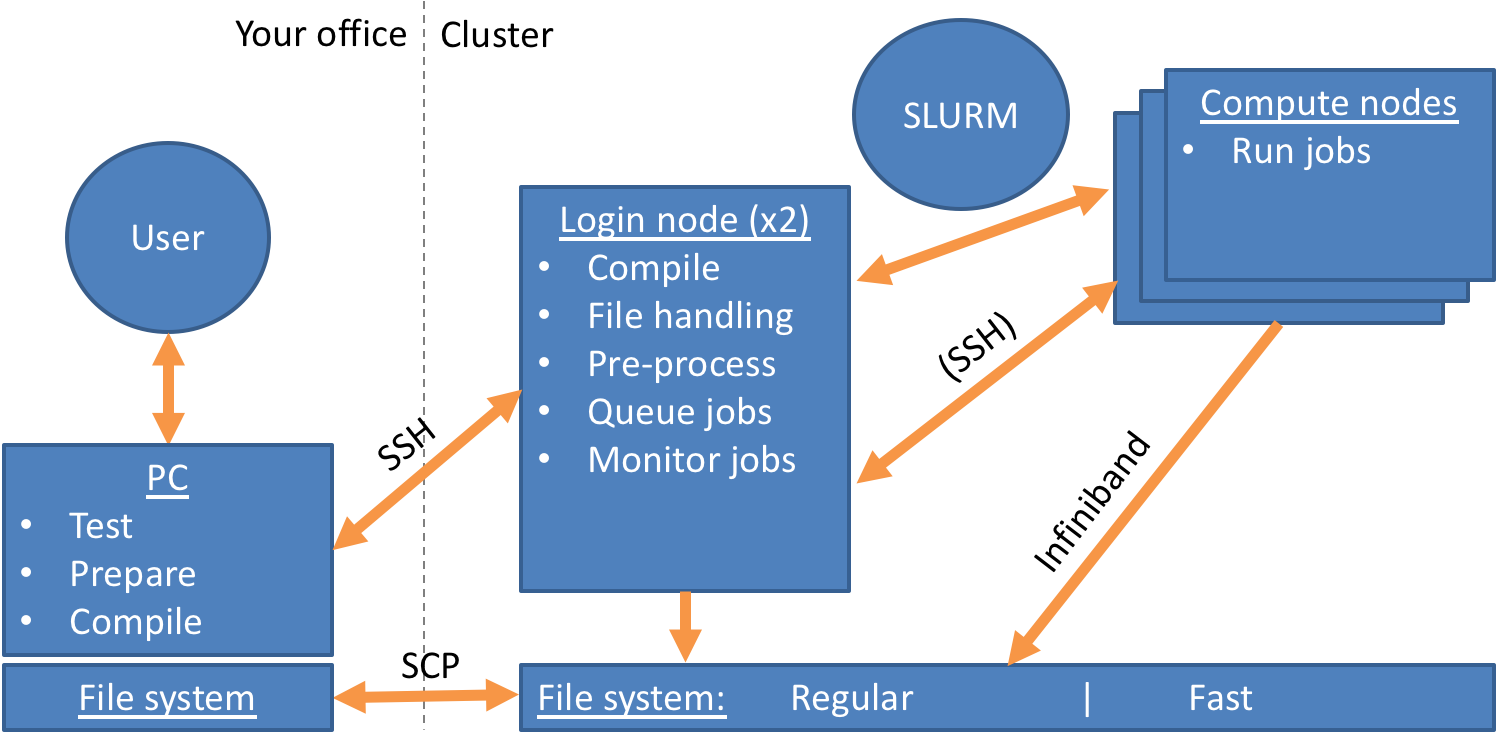

Many things about a cluster are identical to any other (Linux) computer. There are however some differences. The most important one is the fact that you do not sit in front of the cluster, but connect to it from another computer. This is shown in the following image:

As you can see, you always connect to one of the login nodes. You typically interact with the compute nodes only through the scheduler, SLURM, which is described below. The image also shows the shared file system.

Here are some additional differences:

Workspaces

Like on any Linux system, you have a home directory on the cluster. However, when you run computations, you should create a so-called workspace. Workspaces are located on a different part of the file system, which has a faster connection to the compute nodes. Unlike your home directory, they are also unlimited in size. Their lifetime is limited, though: a workspace is deleted after a certain number of days. You can find more about workspaces here.
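If you drive your computations from Python, you can obtain a workspace path programmatically. The sketch below assumes that the cluster provides the ws_allocate command from the widely used hpc-workspace tools, which takes a workspace name and a duration in days and prints the workspace path on standard output; please check the workspace page for the exact commands and limits on OMNI. The function allocate_workspace is a hypothetical helper of our own.

```python
import shutil
import subprocess


def allocate_workspace(name: str, days: int) -> str:
    """Allocate a workspace and return its path.

    Assumes the hpc-workspace command ws_allocate is installed; this
    helper itself is only an illustration, not a cluster tool.
    """
    if shutil.which("ws_allocate") is None:
        raise RuntimeError("ws_allocate not found; run this on the cluster")
    result = subprocess.run(
        ["ws_allocate", name, str(days)],
        capture_output=True,
        text=True,
        check=True,
    )
    # ws_allocate writes the path to stdout and status messages to stderr.
    return result.stdout.strip()
```

For example, allocate_workspace("my-simulation", 7) would request a workspace named my-simulation for seven days (both values hypothetical), and your job could then write its large output files below the returned path instead of into your home directory.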

The OMNI cluster also has a so-called burst buffer, a partition made up of SSDs (solid-state drives) that is intended for jobs that read or write a large number of files. How to use the burst buffer is described here.

Modules

Operating systems like Linux use so-called environments to decide which program runs when a specific command is typed. Among other things, paths to executable files and various settings are stored in environment variables. Since many users with different needs work on a cluster, different versions of the same software are often installed. If everyone used the same environment, commands would be ambiguous, because one command name could refer to several installed versions. The environment on the cluster is therefore modular and can easily be exchanged.

For many programs installed on the cluster, the environment is predefined in a so-called module. Loading the module is all it takes to set up that environment. How to do that is described here.
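The mechanism behind modules can be illustrated in a few lines of Python. The sketch below creates two fake installations of a hypothetical program mytool in a temporary directory and mimics the core of what loading a module does: it puts the chosen version's bin directory first on PATH, so that a command lookup (here shutil.which, standing in for the shell) finds that version first. Real module systems do considerably more, such as setting library paths, resolving dependencies and unloading modules again; this only models the PATH part, and all names in it are made up.

```python
import os
import shutil
import stat
import tempfile
from pathlib import Path


def make_fake_tool(bin_dir: Path, name: str, version: str) -> None:
    """Create a tiny executable script standing in for an installed program."""
    bin_dir.mkdir(parents=True, exist_ok=True)
    tool = bin_dir / name
    tool.write_text(f"#!/bin/sh\necho {name} {version}\n")
    tool.chmod(tool.stat().st_mode | stat.S_IXUSR)  # mark as executable


def load_module(env: dict, prefix: Path) -> dict:
    """Return a copy of env with prefix/bin placed first on PATH."""
    old_entries = [p for p in env.get("PATH", "").split(os.pathsep) if p]
    entries = [str(prefix / "bin")] + old_entries
    return {**env, "PATH": os.pathsep.join(entries)}


def demo() -> list:
    """Show which mytool a command lookup finds after 'loading' each version."""
    found = []
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for version in ("1.0", "2.0"):
            make_fake_tool(root / f"mytool-{version}" / "bin", "mytool", version)
        base_env = {"PATH": "/usr/bin:/bin"}
        for version in ("1.0", "2.0"):
            env = load_module(base_env, root / f"mytool-{version}")
            exe = shutil.which("mytool", path=env["PATH"])
            # Record the installation directory holding the resolved program.
            found.append(Path(exe).parent.parent.name)
    return found


if __name__ == "__main__":
    print(demo())  # ['mytool-1.0', 'mytool-2.0']
```

The same command name resolves to a different installation depending only on the environment, which is exactly why the cluster keeps environments separate and exchangeable.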

How to get onto the cluster?

The cluster is available to university members free of charge. Employees can also register students, who may then use the cluster as well. All you need to do is register your user account for cluster usage and set up a connection to the cluster.

Registering your account is described for both employees and students here.

Connecting happens via the Secure Shell Protocol (SSH) and is in principle possible from Windows, Linux and Mac OS systems. Setting up an SSH connection is described here.

The address of the cluster will be e-mailed to you upon registration. By default, the cluster can be reached from inside the university network or via the Uni VPN. Access without the Uni VPN can also be enabled.
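If a connection attempt fails, a common cause is that your computer is outside the university network and not connected to the Uni VPN. The following Python sketch checks whether a TCP connection to the cluster's SSH port can be opened at all; it assumes the standard SSH port 22, and it does not log in or check your credentials. The function name ssh_port_reachable is our own; pass the address from your registration e-mail as host.

```python
import socket


def ssh_port_reachable(host: str, port: int = 22, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port opens within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts and unknown host names all raise
        # subclasses of OSError; any of them means "not reachable from here".
        return False
```

For example, if ssh_port_reachable("<cluster address>") returns False at home but True on campus, you most likely need to connect to the Uni VPN before using SSH.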

How do I run computations on the cluster?

Computations on the cluster are run in so-called jobs. You specify how many resources (CPUs, RAM) your job needs and for how long. You also typically provide a job script that specifies which program(s) are to be run in the job. You then submit the job to a waiting queue, and the scheduler, SLURM, decides when to run it based on the job size and other factors. How to create job scripts and queue jobs is explained here; more information on SLURM is here.
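Job scripts are most often shell scripts, but SLURM's sbatch accepts any script that starts with a #! interpreter line and reads #SBATCH directives from the comment lines at the top of the file. Since # also starts a comment in Python, a job can be written directly in Python, as in the following minimal sketch. The job name and resource values (one task with 4 cores, 8 GB of RAM, 30 minutes) are arbitrary examples, and depending on the cluster's configuration you may also have to specify a partition, so please check the job script page for OMNI's requirements. SLURM sets SLURM_CPUS_PER_TASK inside the job when --cpus-per-task is requested; the script falls back to 1 so that it also runs outside a job for testing.

```python
#!/usr/bin/env python3
# SLURM reads the "#SBATCH" lines below; for Python they are plain comments.
# Example request: 1 task with 4 cores, 8 GB RAM, at most 30 minutes.
#SBATCH --job-name=py-example
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=4
#SBATCH --mem=8G
#SBATCH --time=00:30:00

import os
import socket


def job_info() -> dict:
    """Collect details that SLURM exposes to the job via environment variables."""
    return {
        "job_id": os.environ.get("SLURM_JOB_ID", "none (not running under SLURM)"),
        # Set by SLURM when --cpus-per-task is requested; default to 1 otherwise.
        "cpus": int(os.environ.get("SLURM_CPUS_PER_TASK", "1")),
        "host": socket.gethostname(),
    }


def main() -> int:
    info = job_info()
    print(f"Job {info['job_id']} on {info['host']} with {info['cpus']} core(s)")
    # Placeholder for the actual computation; pass info["cpus"] to your
    # program or library so that it uses exactly the cores you requested.
    result = sum(i * i for i in range(1_000_000))
    print("Result:", result)
    return result


if __name__ == "__main__":
    main()
```

Saved as, for example, job.py, the script would be submitted with sbatch job.py, and squeue -u $USER shows its state in the queue.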

Help and questions

The cluster website covers the most important topics and provides links to the documentation of installed software. If you need help with a specific Linux command, built-in help is also available, in particular the Linux manual, which you can open with the command man <command name>. If the developers have written a manual page (man page) for their software, it is displayed.

If the website does not answer your question, you can send an e-mail to hpc-support@uni-siegen.de or visit our weekly consultation hour. Please use the same e-mail address to report problems and to request software installations on the cluster.

ZIMT also offers training courses on high-performance computing and cluster usage. Upcoming courses are listed here.

Additionally, ZIMT offers consulting on the development and optimization of your software. ZIMT experts are available to review your software or to advise you in person. To request a consultation, you can also send an e-mail to hpc-support@uni-siegen.de.